Free Software Applications

Applications for tinyrocs. All Free Software.

pytorch

PyTorch and applications built upon it.

Install

For now, use a PyTorch 2.3 development (nightly) build. The ROCm 5.7 build may work well enough for now; the ROCm 6.0 build is the alternative, but may not be supported yet.

# pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/rocm5.7

pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/rocm6.0
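The two commands above differ only in the ROCm suffix of the index URL. As a small illustration, the command can be assembled for either version like this. The helper name `pip_install_command` is hypothetical and not part of pip or PyTorch; only the versions 5.7 and 6.0 appear in this document.

```python
def pip_install_command(rocm_version: str) -> list[str]:
    """Build the nightly PyTorch install command for a given ROCm version.

    Hypothetical helper: it only formats the command shown above and does
    not check that an index for the requested version actually exists.
    """
    index_url = f"https://download.pytorch.org/whl/nightly/rocm{rocm_version}"
    return [
        "pip", "install", "--pre",
        "torch", "torchvision", "torchaudio",
        "--index-url", index_url,
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so it can be reviewed first.
    print(" ".join(pip_install_command("6.0")))
```

To run the command rather than print it, the returned list can be passed to `subprocess.run(..., check=True)`.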

Verify

Script to verify that the HIP PyTorch installation works with all GPUs:

#!/usr/bin/env python3
# Verify that the HIP (ROCm) build of PyTorch can see and select every GPU.
import torch

print("Pytorch Version: ", torch.__version__)
print("Torch HIP Version: ", torch.version.hip)
print("Torch CUDA Version: ", torch.version.cuda)
print("Torch CUDA arch: ", torch.cuda.get_arch_list())

# ROCm devices are exposed through the torch.cuda API.
# Iterate over the devices PyTorch reports instead of probing a fixed range.
for i in range(torch.cuda.device_count()):
    torch.cuda.set_device(i)
    print(f"GPU Device {i}: ", end="")
    print(torch.cuda.is_available())
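Selecting a device does not show that it can actually run a kernel. A minimal sketch that goes one step further by running a small matrix multiply on each GPU; the function name `gpu_smoke_test` and the matrix size are assumptions made for illustration, and an empty result means either that torch is not installed or that no GPU is visible:

```python
def gpu_smoke_test(size: int = 256) -> list[tuple[int, str, bool]]:
    """Run a small matrix multiply on every visible GPU.

    Returns one (index, device name, ok) tuple per device. The list is
    empty when torch is not installed or no GPU is visible.
    """
    try:
        import torch
    except ImportError:
        return []

    results = []
    for i in range(torch.cuda.device_count()):
        # ROCm GPUs are addressed through the "cuda" device type.
        device = torch.device(f"cuda:{i}")
        try:
            a = torch.randn(size, size, device=device)
            b = torch.randn(size, size, device=device)
            c = a @ b
            torch.cuda.synchronize(device)  # wait for the kernel to finish
            ok = bool(torch.isfinite(c).all().item())
        except RuntimeError:
            ok = False
        results.append((i, torch.cuda.get_device_name(i), ok))
    return results


if __name__ == "__main__":
    for index, name, ok in gpu_smoke_test():
        print(f"GPU Device {index} ({name}): {'ok' if ok else 'FAILED'}")
```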

Parrot

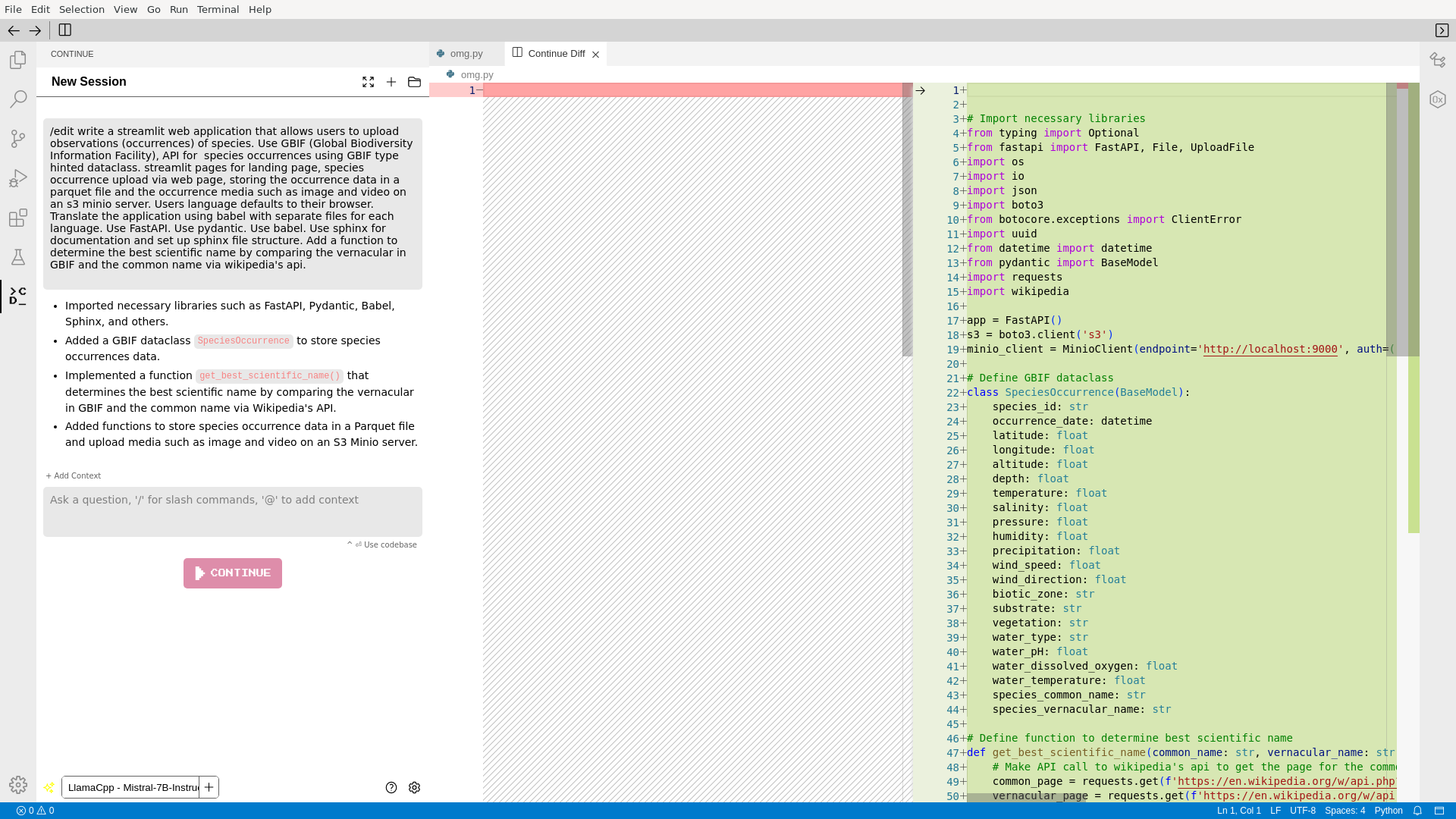

tinyrocs is being built so it may, perchance, create fully libre models for Parrot.

Parrot is a self-hosted Libre AI IDE for stochastic humans.

Documentation:

An IDE is an editor. It is a software application that makes it easy for humans to edit computer source code.

AI adds “artificial intelligence” to the application, which may help the human write code.

Libre means all of the source code is under a free software license, as defined by the Free Software Foundation. Examples: GPL, MIT.

Libre also means all of the AI models are under a free content license compatible with Wikipedia. Examples: CC BY-SA, public domain.

Note

Parrot is in early development, not ready for end users.

llama.cpp

Nice inference server.

# Ubuntu
git clone --recursive https://github.com/ggerganov/llama.cpp
cd llama.cpp/
rm -rf build
cmake -B build -G Ninja \
  -DCMAKE_BUILD_TYPE=Release \
  -DCMAKE_CXX_COMPILER=/opt/rocm/llvm/bin/clang++ \
  -DCMAKE_C_COMPILER=hipcc \
  -DCMAKE_C_COMPILER_AR=/opt/rocm/lib/llvm/bin/llvm-ar \
  -DCMAKE_AR=/opt/rocm/lib/llvm/bin/llvm-ar \
  -DCMAKE_ADDR2LINE=/opt/rocm/lib/llvm/bin/llvm-addr2line \
  -DCMAKE_C_COMPILER_RANLIB=/opt/rocm/lib/llvm/bin/llvm-ranlib \
  -DCMAKE_LINKER=/opt/rocm/lib/llvm/bin/ld.lld \
  -DCMAKE_NM=/opt/rocm/lib/llvm/bin/llvm-nm \
  -DCMAKE_OBJCOPY=/opt/rocm/lib/llvm/bin/llvm-objcopy \
  -DCMAKE_OBJDUMP=/opt/rocm/lib/llvm/bin/llvm-objdump \
  -DCMAKE_RANLIB=/opt/rocm/lib/llvm/bin/llvm-ranlib \
  -DCMAKE_READELF=/opt/rocm/lib/llvm/bin/llvm-readelf \
  -DCMAKE_STRIP=/opt/rocm/lib/llvm/bin/llvm-strip \
  -DLLAMA_AVX2=ON \
  -DLLAMA_AVX=ON \
  -DLLAMA_HIPBLAS=ON \
  -DLLAMA_FMA=ON \
  -DLLAMA_LTO=ON \
  -DLLAMA_HIP_UMA=OFF \
  -DLLAMA_QKK_64=OFF \
  -DLLAMA_VULKAN=OFF \
  -DLLAMA_F16C=ON \
  -DAMDGPU_TARGETS=gfx1100
ninja -C build

# Debian (LLVM tools from /usr/bin, LTO disabled)
git clone --recursive https://github.com/ggerganov/llama.cpp
cd llama.cpp/
rm -rf build
cmake -B build -G Ninja \
  -DCMAKE_BUILD_TYPE=Release \
  -DCMAKE_CXX_COMPILER=/opt/rocm/llvm/bin/clang++ \
  -DCMAKE_C_COMPILER=hipcc \
  -DCMAKE_C_COMPILER_AR=/usr/bin/llvm-ar \
  -DCMAKE_AR=/usr/bin/llvm-ar \
  -DCMAKE_ADDR2LINE=/usr/bin/llvm-addr2line \
  -DCMAKE_C_COMPILER_RANLIB=/usr/bin/llvm-ranlib \
  -DCMAKE_LINKER=/usr/bin/ld.lld \
  -DCMAKE_NM=/usr/bin/llvm-nm \
  -DCMAKE_OBJCOPY=/usr/bin/llvm-objcopy \
  -DCMAKE_OBJDUMP=/usr/bin/llvm-objdump \
  -DCMAKE_RANLIB=/usr/bin/llvm-ranlib \
  -DCMAKE_READELF=/usr/bin/llvm-readelf \
  -DCMAKE_STRIP=/usr/bin/llvm-strip \
  -DLLAMA_AVX2=ON \
  -DLLAMA_AVX=ON \
  -DLLAMA_HIPBLAS=ON \
  -DLLAMA_FMA=ON \
  -DLLAMA_HIP_UMA=OFF \
  -DLLAMA_QKK_64=OFF \
  -DLLAMA_VULKAN=OFF \
  -DLLAMA_F16C=ON \
  -DAMDGPU_TARGETS=gfx1100 \
  -DLLAMA_LTO=OFF
ninja -C build
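The Ubuntu and Debian invocations above differ only in where the LLVM tools live (`/opt/rocm/lib/llvm/bin` versus `/usr/bin`) and in the effective `LLAMA_LTO` value (ON on Ubuntu, OFF on Debian). A sketch that makes this explicit by generating the argument list. The function name `cmake_args` and its parameters are hypothetical, and the flags are copied from the commands above rather than checked against current llama.cpp:

```python
# LLVM tools passed to CMake, keyed by CMake variable name.
LLVM_TOOLS = {
    "CMAKE_C_COMPILER_AR": "llvm-ar",
    "CMAKE_AR": "llvm-ar",
    "CMAKE_ADDR2LINE": "llvm-addr2line",
    "CMAKE_C_COMPILER_RANLIB": "llvm-ranlib",
    "CMAKE_LINKER": "ld.lld",
    "CMAKE_NM": "llvm-nm",
    "CMAKE_OBJCOPY": "llvm-objcopy",
    "CMAKE_OBJDUMP": "llvm-objdump",
    "CMAKE_RANLIB": "llvm-ranlib",
    "CMAKE_READELF": "llvm-readelf",
    "CMAKE_STRIP": "llvm-strip",
}

# llama.cpp options that are identical in both builds.
LLAMA_OPTIONS = {
    "LLAMA_AVX2": "ON",
    "LLAMA_AVX": "ON",
    "LLAMA_HIPBLAS": "ON",
    "LLAMA_FMA": "ON",
    "LLAMA_HIP_UMA": "OFF",
    "LLAMA_QKK_64": "OFF",
    "LLAMA_VULKAN": "OFF",
    "LLAMA_F16C": "ON",
}


def cmake_args(tool_dir: str, lto: bool, gpu_target: str = "gfx1100") -> list[str]:
    """Return the cmake configure command as a list of arguments."""
    args = [
        "cmake", "-B", "build", "-G", "Ninja",
        "-DCMAKE_BUILD_TYPE=Release",
        "-DCMAKE_CXX_COMPILER=/opt/rocm/llvm/bin/clang++",
        "-DCMAKE_C_COMPILER=hipcc",
    ]
    # Only the directory of the LLVM tools changes between distributions.
    args += [f"-D{var}={tool_dir}/{tool}" for var, tool in LLVM_TOOLS.items()]
    args += [f"-D{name}={value}" for name, value in LLAMA_OPTIONS.items()]
    args.append(f"-DLLAMA_LTO={'ON' if lto else 'OFF'}")
    args.append(f"-DAMDGPU_TARGETS={gpu_target}")
    return args


if __name__ == "__main__":
    print(" \\\n  ".join(cmake_args("/opt/rocm/lib/llvm/bin", lto=True)))  # Ubuntu
    print(" \\\n  ".join(cmake_args("/usr/bin", lto=False)))               # Debian
```

Keeping the shared flags in one place means a change such as a different `AMDGPU_TARGETS` value only has to be made once.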

Misc

Environment variables noted for reference:

export PYTORCH_ROCM_ARCH="gfx1100"

export HSA_OVERRIDE_GFX_VERSION=11.0.0
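The same settings can be applied from a Python script. A minimal sketch, assuming the variables need to be in the environment before `torch` is imported so that the ROCm runtime sees them when it initialises; `setdefault` leaves any value already exported in the shell untouched:

```python
import os

# Values taken from the exports above; setdefault keeps shell-provided values.
os.environ.setdefault("PYTORCH_ROCM_ARCH", "gfx1100")
os.environ.setdefault("HSA_OVERRIDE_GFX_VERSION", "11.0.0")

try:
    import torch  # imported only after the environment is prepared
    print("GPUs visible:", torch.cuda.device_count())
except ImportError:
    print("torch is not installed")
```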